In this article, we will look at links and images, and the various scenarios involving them that can be handled using Selenium.

1. Accessing Links using Link Text and Partial Link Text

The easiest way to access links on a web page is with the linkText and partialLinkText locators. linkText matches the entire link text to find the element, whereas partialLinkText matches only part of the link text.
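To make the difference concrete, here is a small browser-free model of the two matching rules. It assumes the behaviour described in the W3C WebDriver specification: linkText compares the trimmed visible text for exact equality, and partialLinkText checks whether the visible text contains the selector. Real Selenium performs this matching inside the browser. The class and method names are hypothetical.

```java
// Simplified, browser-free model of how the two locators compare link text.
// Assumption: linkText = trimmed visible text must equal the selector exactly;
// partialLinkText = visible text must contain the selector somewhere.
public class LinkTextMatching {

    // linkText: exact match against the trimmed visible text
    static boolean matchesLinkText(String visibleText, String selector) {
        return visibleText.trim().equals(selector);
    }

    // partialLinkText: the selector only has to appear inside the text
    static boolean matchesPartialLinkText(String visibleText, String selector) {
        return visibleText.contains(selector);
    }

    public static void main(String[] args) {
        String text = "Click Here";
        System.out.println(matchesLinkText(text, "Click Here"));   // true
        System.out.println(matchesLinkText(text, "Here"));         // false
        System.out.println(matchesPartialLinkText(text, "Here"));  // true
    }
}
```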

```html
<!-- HTML Code -->
<a href="http://www.testersdock.com">Click Here</a>
```

```java
// Selenium Code
WebElement linkTextEle = driver.findElement(By.linkText("Click Here"));
// The factory method is partialLinkText (lowercase p)
WebElement partialEle = driver.findElement(By.partialLinkText("Here"));
```

Two things to keep in mind:

1(a). If more than one link has the same text, the above locators identify only the first occurrence.

```html
<!-- HTML Code -->
<a href="http://www.google.com">Click Here</a>
<a href="http://www.bing.com">Click Here</a>
<a href="http://www.yahoo.com">Click Here</a>
```

```java
// Selenium Code
WebElement linkTextEle = driver.findElement(By.linkText("Click Here"));
WebElement partialLinkTextEle = driver.findElement(By.partialLinkText("Here"));
```

In the above example, only the first Click Here link (the one pointing to google.com) is identified.
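The "first occurrence" rule is the difference between findElement, which returns the first match in document order, and findElements, which returns every match. The sketch below simulates both over the three links from the example without a browser. The Link class and helper methods are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

// Browser-free illustration of findElement (first match) versus
// findElements (all matches). Link, findFirst and findAll are hypothetical.
public class FirstMatchDemo {

    static final class Link {
        final String text;
        final String href;

        Link(String text, String href) {
            this.text = text;
            this.href = href;
        }
    }

    // Like findElement: first link in document order whose text matches
    static Link findFirst(List<Link> links, String linkText) {
        for (Link link : links) {
            if (link.text.equals(linkText)) {
                return link;
            }
        }
        return null; // Selenium would throw NoSuchElementException instead
    }

    // Like findElements: every matching link (possibly an empty list)
    static List<Link> findAll(List<Link> links, String linkText) {
        List<Link> result = new ArrayList<>();
        for (Link link : links) {
            if (link.text.equals(linkText)) {
                result.add(link);
            }
        }
        return result;
    }

    static List<Link> samplePage() {
        return List.of(
                new Link("Click Here", "http://www.google.com"),
                new Link("Click Here", "http://www.bing.com"),
                new Link("Click Here", "http://www.yahoo.com"));
    }

    public static void main(String[] args) {
        List<Link> page = samplePage();
        System.out.println(findFirst(page, "Click Here").href); // http://www.google.com
        System.out.println(findAll(page, "Click Here").size()); // 3
    }
}
```

In Selenium itself, `driver.findElements(By.linkText("Click Here"))` returns all three elements as a `List<WebElement>`, so a specific one can be picked by index, for example `.get(1)` for the bing.com link.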

1(b). The parameters for both locators are case-sensitive, so Click Here and click here are treated as two different texts.

```html
<!-- HTML Code -->
<a href="http://www.google.com">Click Here</a>
<a href="http://www.bing.com">click here</a>
```

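In the above example, case sensitivity lets us target each link individually. linkText("Click Here") finds only the google.com link. partialLinkText("here") finds only the bing.com link, because "Click Here" does not contain the lowercase "here".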

```java
// Selenium Code
WebElement linkTextEle = driver.findElement(By.linkText("Click Here"));    // google.com link
WebElement partialEle = driver.findElement(By.partialLinkText("here"));    // bing.com link
```
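The following browser-free sketch checks which link each locator resolves to. It assumes Selenium's matching is case-sensitive, in the same way as Java's `String.equals` and `String.contains`. The class and its methods are hypothetical and only model the two links from the example.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Browser-free check of which link each locator resolves to, assuming
// case-sensitive matching (String.equals / String.contains semantics).
public class CaseSensitivityDemo {

    // Visible text -> href, in document order (LinkedHashMap keeps insertion order)
    static Map<String, String> samplePage() {
        Map<String, String> links = new LinkedHashMap<>();
        links.put("Click Here", "http://www.google.com");
        links.put("click here", "http://www.bing.com");
        return links;
    }

    // Exact, case-sensitive match (models linkText)
    static String byLinkText(Map<String, String> page, String selector) {
        for (Map.Entry<String, String> e : page.entrySet()) {
            if (e.getKey().trim().equals(selector)) {
                return e.getValue();
            }
        }
        return null;
    }

    // Substring, case-sensitive match (models partialLinkText)
    static String byPartialLinkText(Map<String, String> page, String selector) {
        for (Map.Entry<String, String> e : page.entrySet()) {
            if (e.getKey().contains(selector)) {
                return e.getValue();
            }
        }
        return null;
    }

    public static void main(String[] args) {
        Map<String, String> page = samplePage();
        System.out.println(byLinkText(page, "Click Here"));  // http://www.google.com
        System.out.println(byPartialLinkText(page, "here")); // http://www.bing.com
        System.out.println(byLinkText(page, "CLICK HERE"));  // null: no exact-case match
    }
}
```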

2. Finding links on a web page

To capture all the links on a web page, we first store all the `a` elements in a list and then print each link's `href` using an iterator.

```java
import java.util.Iterator;
import java.util.List;

import org.openqa.selenium.By;
import org.openqa.selenium.WebElement;
import org.openqa.selenium.chrome.ChromeDriver;

public class AllLinks {
    public static void main(String[] args) {
        String url;
        // Tell Selenium where to find ChromeDriver
        System.setProperty("webdriver.chrome.driver", "C:\\selenium\\chromedriver.exe");
        // Initialize the browser
        ChromeDriver driver = new ChromeDriver();
        // Launch Yahoo
        driver.get("http://yahoo.com/");
        // Store all elements with the 'a' tag in a list (generic type avoids casts)
        List<WebElement> allLinks = driver.findElements(By.tagName("a"));
        // Iterate over the list
        Iterator<WebElement> iterate = allLinks.iterator();
        while (iterate.hasNext()) {
            // Print the URL
            url = iterate.next().getAttribute("href");
            System.out.println(url);
        }
        // Close the browser
        driver.quit();
    }
}
```

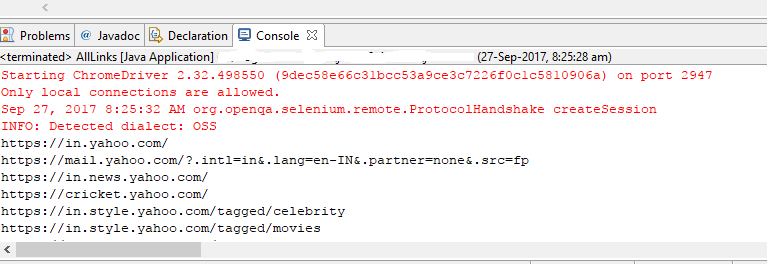

We should get an output like this:
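The printed list usually contains anchors without an `href` (these print as `null` or an empty string), non-HTTP links such as `mailto:` or `javascript:`, and repeated URLs. If you plan to check the links further, a hypothetical clean-up helper like the one below can keep only unique `http`/`https` URLs in first-seen order. The sample URLs in `main` are made up.

```java
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Locale;
import java.util.Set;

// Hypothetical helper: drop missing hrefs, non-HTTP schemes (mailto:,
// javascript:, tel:, ...) and duplicates, keeping first-seen order.
public class LinkFilter {

    static List<String> httpLinksOnly(List<String> hrefs) {
        Set<String> unique = new LinkedHashSet<>();
        for (String href : hrefs) {
            if (href == null || href.isBlank()) {
                continue; // anchor without an href attribute
            }
            String lower = href.toLowerCase(Locale.ROOT);
            if (lower.startsWith("http://") || lower.startsWith("https://")) {
                unique.add(href); // a Set silently ignores repeats
            }
        }
        return new ArrayList<>(unique);
    }

    public static void main(String[] args) {
        List<String> collected = new ArrayList<>();
        collected.add("https://www.yahoo.com/news/");
        collected.add(null);
        collected.add("mailto:someone@example.com");
        collected.add("https://www.yahoo.com/news/");
        collected.add("javascript:void(0)");
        collected.add("https://www.yahoo.com/finance/");
        System.out.println(httpLinksOnly(collected));
        // [https://www.yahoo.com/news/, https://www.yahoo.com/finance/]
    }
}
```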

3. Finding Broken links

To check whether a URL works or is broken, we need its HTTP status code. HTTP status codes are response codes returned by the server, and they help identify the cause of an error.

| HTTP Status Codes | Significance |
|---|---|
| 1xx (Informational) | The response indicates that the request was received and understood. |
| 2xx (Success) | The response indicates the action requested by the client was received, understood, accepted, and processed successfully. |
| 3xx (Redirection) | The response indicates the client must take additional action to complete the request. |
| 4xx (Client Error) | The response is intended for situations in which the error seems to have been caused by the client. |
| 5xx (Server Error) | The response is received when a server fails to fulfill an apparently valid request. |
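The table maps directly onto integer ranges. The sketch below classifies a status code the same way. Treating any 2xx code as a working link follows the table. The "Unknown" fallback covers values such as the -1 that `HttpURLConnection.getResponseCode()` returns when the response is not valid HTTP. The class and method names are hypothetical.

```java
// Maps an HTTP status code to the classes listed in the table.
public class StatusClass {

    static String describe(int code) {
        if (code >= 100 && code < 200) return "1xx (Informational)";
        if (code >= 200 && code < 300) return "2xx (Success)";
        if (code >= 300 && code < 400) return "3xx (Redirection)";
        if (code >= 400 && code < 500) return "4xx (Client Error)";
        if (code >= 500 && code < 600) return "5xx (Server Error)";
        return "Unknown"; // e.g. -1 when the response is not valid HTTP
    }

    // Any 2xx code counts as a working link
    static boolean isSuccess(int code) {
        return code >= 200 && code < 300;
    }

    public static void main(String[] args) {
        int[] samples = {200, 301, 404, 503};
        for (int code : samples) {
            System.out.println(code + " -> " + describe(code));
        }
    }
}
```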

As the table shows, any link whose response code is in the 2xx range is valid. So we use the getResponseCode() method of HttpURLConnection to find the HTTP response code for each link. The method does not return just two values. It returns whatever integer status code the server sends, for example 200 (OK), 404 (Not Found), or 401 (Unauthorized), or -1 if the response is not valid HTTP. For simplicity, the program that follows treats a link as valid only when it returns 200, and reports every other code as invalid or broken.
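To see getResponseCode() report the server's real status code without opening a browser or touching the internet, the sketch below starts a throwaway local server. It uses the JDK's built-in `com.sun.net.httpserver` package and sends one request per path. The `/ok` and `/missing` paths are made up for this demo. `statusOf` follows the same steps as the broken-links program, except that it returns -1 instead of throwing when the request itself fails.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.HttpURLConnection;
import java.net.InetSocketAddress;
import java.net.URI;

// Browser-free sketch: a local server shows that getResponseCode() reports
// whatever status the server sends. Paths /ok and /missing are made up.
public class ResponseCodeDemo {

    static HttpServer startServer() {
        try {
            // Port 0 lets the OS pick any free port on the loopback interface
            HttpServer server = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0);
            server.createContext("/ok", exchange -> {
                exchange.sendResponseHeaders(200, -1); // -1 = no response body
                exchange.close();
            });
            server.createContext("/missing", exchange -> {
                exchange.sendResponseHeaders(404, -1);
                exchange.close();
            });
            server.start();
            return server;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Same steps as the broken-links program; -1 if the request itself fails
    static int statusOf(String href) {
        try {
            HttpURLConnection connection =
                    (HttpURLConnection) URI.create(href).toURL().openConnection();
            connection.setRequestMethod("GET");
            connection.connect();
            int code = connection.getResponseCode();
            connection.disconnect();
            return code;
        } catch (IOException | IllegalArgumentException e) {
            return -1;
        }
    }

    public static void main(String[] args) {
        HttpServer server = startServer();
        String base = "http://127.0.0.1:" + server.getAddress().getPort();
        try {
            System.out.println(statusOf(base + "/ok"));      // 200
            System.out.println(statusOf(base + "/missing")); // 404
        } finally {
            server.stop(0);
        }
    }
}
```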

```java
import java.net.HttpURLConnection;
import java.net.URL;
import java.util.List;

import org.openqa.selenium.By;
import org.openqa.selenium.WebElement;
import org.openqa.selenium.chrome.ChromeDriver;

public class BrokenLinks {
    public static void main(String[] args) {
        // Tell Selenium where to find ChromeDriver
        System.setProperty("webdriver.chrome.driver", "C:\\selenium\\chromedriver.exe");
        // Initialize the browser
        ChromeDriver driver = new ChromeDriver();
        // Maximize the browser window
        driver.manage().window().maximize();
        // Launch Google
        driver.get("https://www.google.co.in/");
        // Store all the 'a' tags in a list
        List<WebElement> links = driver.findElements(By.tagName("a"));
        // Display the total number of links on the web page
        System.out.println("Total links are " + links.size());
        // Use '<', not '<=': the last valid index is size() - 1
        for (int i = 0; i < links.size(); i++) {
            try {
                // Get the URL of the current link
                String nextHref = links.get(i).getAttribute("href");
                // Skip anchors without an href and non-HTTP links (mailto:, javascript:, ...)
                if (nextHref == null || !nextHref.startsWith("http")) {
                    continue;
                }
                // Get the response code for the URL
                URL url = new URL(nextHref);
                HttpURLConnection connection = (HttpURLConnection) url.openConnection();
                connection.setRequestMethod("GET");
                connection.connect();
                int code = connection.getResponseCode();
                connection.disconnect();
                // Check whether the URL is valid or invalid
                if (code == 200) {
                    System.out.println("Valid Link:" + nextHref);
                } else {
                    System.out.println("INVALID Link:" + nextHref);
                }
            } catch (Exception e) {
                System.out.println(e.getMessage());
            }
        }
        // Close the browser
        driver.quit();
    }
}
```

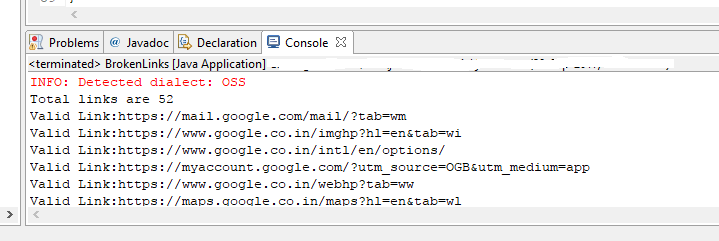

On executing it, we should get a list of URLs along with its status as Valid or Invalid.

4. Finding Broken Images

An image tag looks like this: `<img src="link">`. So first, we find all the images using the `img` tag name, and then fetch each image's URL from its `src` attribute. After that, just as with links, we check the HTTP response code: 200 means the image is valid, and any other code means it is treated as invalid or broken.

```java
import java.net.HttpURLConnection;
import java.net.URL;
import java.util.List;

import org.openqa.selenium.By;
import org.openqa.selenium.WebElement;
import org.openqa.selenium.chrome.ChromeDriver;

public class BrokenImages {
    public static void main(String[] args) {
        // Tell Selenium where to find ChromeDriver
        System.setProperty("webdriver.chrome.driver", "C:\\selenium\\chromedriver.exe");
        // Initialize the browser
        ChromeDriver driver = new ChromeDriver();
        // Maximize the browser window
        driver.manage().window().maximize();
        // Launch Pixabay
        driver.get("https://www.pixabay.com/");
        // Store all the 'img' tags in a list
        List<WebElement> images = driver.findElements(By.tagName("img"));
        // Display the total number of images on the web page
        System.out.println("Total images are " + images.size());
        // Use '<', not '<=': the last valid index is size() - 1
        for (int i = 0; i < images.size(); i++) {
            try {
                // Get the image URL from the src attribute
                String nextSrc = images.get(i).getAttribute("src");
                // Skip images without a src, and data: URIs that cannot be fetched over HTTP
                if (nextSrc == null || !nextSrc.startsWith("http")) {
                    continue;
                }
                // Get the response code for the URL
                URL url = new URL(nextSrc);
                HttpURLConnection connection = (HttpURLConnection) url.openConnection();
                connection.setRequestMethod("GET");
                connection.connect();
                int code = connection.getResponseCode();
                connection.disconnect();
                // Check whether the image URL is valid or invalid
                if (code == 200) {
                    System.out.println("Valid Image:" + nextSrc);
                } else {
                    System.out.println("INVALID Image:" + nextSrc);
                }
            } catch (Exception e) {
                System.out.println(e.getMessage());
            }
        }
        // Close the browser
        driver.quit();
    }
}
```

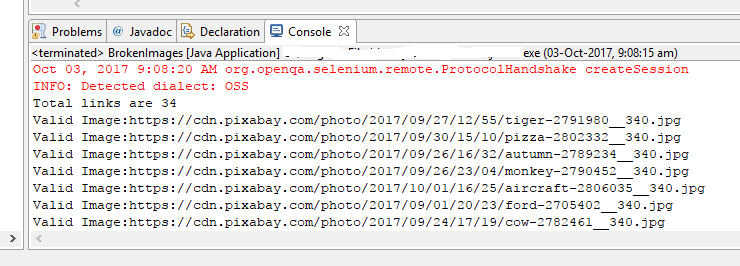

After Execution, we should get a list of valid/invalid URLs.
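A 200 status alone does not guarantee that an image URL actually serves an image. Some servers answer a missing file with status 200 and an HTML error page (a "soft 404"). As an optional, stricter variation that is not part of the program above, you could also require an `image/*` Content-Type. The sketch below assumes that servers label images correctly, which is not always true. It uses a local demo server built on the JDK's `com.sun.net.httpserver` package, and its paths are made up.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.HttpURLConnection;
import java.net.InetSocketAddress;
import java.net.URI;

// Hypothetical stricter image check: status 200 AND an image/* Content-Type.
// A local demo server stands in for a real site; its paths are made up.
public class ImageContentTypeCheck {

    static boolean looksLikeWorkingImage(String src) {
        try {
            HttpURLConnection connection =
                    (HttpURLConnection) URI.create(src).toURL().openConnection();
            connection.setRequestMethod("GET");
            int code = connection.getResponseCode();   // connects if needed
            String type = connection.getContentType(); // null if the header is missing
            connection.disconnect();
            return code == 200 && type != null && type.startsWith("image/");
        } catch (IOException | IllegalArgumentException e) {
            return false; // unreachable or malformed URL counts as broken
        }
    }

    static HttpServer startServer() {
        try {
            HttpServer server = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0);
            // A real image response
            server.createContext("/logo.png", exchange -> {
                exchange.getResponseHeaders().add("Content-Type", "image/png");
                exchange.sendResponseHeaders(200, -1);
                exchange.close();
            });
            // A "soft 404": status 200, but an HTML page instead of an image
            server.createContext("/soft404", exchange -> {
                exchange.getResponseHeaders().add("Content-Type", "text/html");
                exchange.sendResponseHeaders(200, -1);
                exchange.close();
            });
            server.start();
            return server;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        HttpServer server = startServer();
        String base = "http://127.0.0.1:" + server.getAddress().getPort();
        try {
            System.out.println(looksLikeWorkingImage(base + "/logo.png")); // true
            System.out.println(looksLikeWorkingImage(base + "/soft404"));  // false
            System.out.println(looksLikeWorkingImage(base + "/missing"));  // false (404)
        } finally {
            server.stop(0);
        }
    }
}
```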
